The crawl budget is a crucial aspect that you must consider for SEO. This article explains the fundamentals of the crawl budget and how to optimize it to get the most out of it.

What is crawl budget?

Let’s begin by defining crawling. Crawling is the process by which search engines like Google send their bots (also known as spiders or crawlers) to find and scan website content, including images, videos, and PDFs.

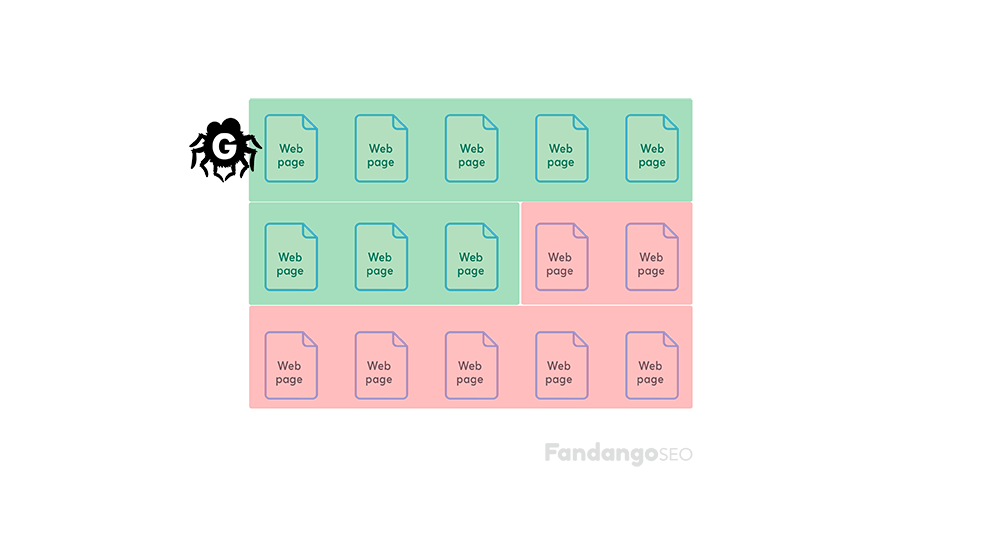

There are billions of web pages, which makes it impractical for Googlebot to crawl all of them continuously. Doing so would consume an enormous amount of bandwidth and, in turn, slow websites down. To avoid this situation, Google allocates a crawl budget to every website. That budget determines how many times Googlebot crawls the website as it looks for pages to index.

Googlebot, for its part, is Google’s automated agent: it moves through a site looking for pages that need to be added to the index, much like a digital web surfer. Knowing how Googlebot works is the first step toward understanding crawl budgets for SEO.

How to measure your crawl budget

The best way to measure the crawl budget is log analysis. It shows how Google behaves on your website and which pages it spends the crawl budget on.

Using a log analyzer like FandangoSEO, you can instantly check the average number of hits (Googlebot visits) to your site per day and per hour, or evaluate how your crawl budget is distributed across different page types. Likewise, you can watch search engine crawls in real time to confirm that your most recent content has been found.
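If you want a rough idea of what such an analysis involves, the sketch below counts Googlebot hits per day and per top-level site section from raw access-log lines. It assumes the server writes the common "combined" log format and identifies Googlebot by its user-agent string only; the function name `googlebot_hits` and the sample lines are made up for illustration. User agents can be spoofed, so a real audit would also verify the requesting IP addresses.

```python
import re
from collections import Counter

# Pulls the day, request path and user agent out of a combined-log-format
# line. The log format itself is an assumption about your server setup.
LOG_LINE = re.compile(
    r'\[(?P<day>[^:]+):[^\]]*\] '
    r'"(?:GET|HEAD|POST) (?P<path>\S+)[^"]*" '
    r'\d{3} \S+ "[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_hits(lines):
    """Count Googlebot requests per day and per top-level section."""
    per_day = Counter()
    per_section = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match or "Googlebot" not in match["agent"]:
            continue  # skip unparsable lines and non-Googlebot traffic
        per_day[match["day"]] += 1
        # Drop the query string, then keep the first path segment:
        # "/blog/post-1" -> "/blog", "/" -> "/"
        path = match["path"].split("?", 1)[0]
        section = "/" + path.lstrip("/").split("/", 1)[0]
        per_section[section] += 1
    return per_day, per_section

# Hypothetical log lines, for illustration only.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
sample = [
    f'66.249.66.1 - - [10/Mar/2024:06:12:01 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'66.249.66.1 - - [10/Mar/2024:07:30:44 +0000] "GET /products/shoes?color=red HTTP/1.1" 200 2048 "-" "{GOOGLEBOT_UA}"',
    '203.0.113.9 - - [10/Mar/2024:07:31:02 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    f'66.249.66.1 - - [11/Mar/2024:01:02:03 +0000] "GET /blog/post-2 HTTP/1.1" 404 512 "-" "{GOOGLEBOT_UA}"',
]

per_day, per_section = googlebot_hits(sample)
for day, hits in per_day.items():
    print(day, hits)
```

Grouping by the first path segment is only one possible way to approximate "page types"; a site with a different URL scheme would need its own grouping rule.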

Why is the crawl rate limit important?

This concept differs from the crawl budget. The crawl rate limit defines the number of simultaneous connections that Googlebot uses to crawl a site and the time it waits before fetching another page. Google focuses on the user experience, so Googlebot applies this limit to keep automated agents from overloading a site to the point where human users find it hard to load in their web browsers.

Several factors affect the crawl rate, including:

- Website speed – If a website responds quickly to Googlebot, Google will raise its crawl rate limit. Conversely, Google will reduce the crawl rate for sluggish websites.

- Settings in the Search Console – A site owner can set the crawl limit through the Search Console. Webmasters who think that Google is over-crawling their server can decrease the crawl rate, but they can’t increase it.

Note that a healthy crawl rate can get the pages indexed faster, but a higher crawl rate is not a ranking factor.

The crawl demand

Even when the crawl rate limit is not reached, Googlebot will be less active if there is little demand for indexing. How much Google wants to crawl a site is called the crawl demand. The two factors that most strongly determine crawl demand are:

- Popularity – URLs that are popular on the Internet are crawled more frequently to keep them fresh in the Google index.

- Staleness – Google’s systems try to prevent URLs from going stale in the index.

In addition, site-wide events such as site moves may trigger an increase in crawl demand so that the site content can be reindexed under the new URLs.

What factors affect the crawl budget for SEO?

A crawl budget combines the crawl demand and the crawl rate limit. Google defines this combination as the total number of URLs that Googlebot is willing and able to crawl. Google has also identified the factors that affect the crawl budget:

- URL parameters – Often, a base URL with parameters appended returns the same page as the base URL itself. This kind of setup can lead to many unique URLs counting towards the crawl budget even though they all return the same page.

- Soft error pages – Soft 404s (pages that display an error message but return a success status code) also consume crawl budget. They are reported in Search Console.

- Duplicate content – At times, URLs can be unique without request parameters, but still return the same web content.

- Hacked pages – Hacked sites usually have their crawl budget limited.

- Low-quality content – Google will likely limit the crawl budget for sites that suffer from poor quality.

- Endless pagination – On sites that generate a practically unlimited number of links, Googlebot spends much of its crawl budget on links that might not be important.

How to optimize your crawl budget

Fortunately, there are simple guidelines that you can put into practice to optimize your Google crawl budget. We cover the main ones below.

Check that your top pages are crawlable

It is vital to ensure that .htaccess and robots.txt do not block your most important pages and that bots have no problems accessing CSS and JavaScript files. You should also block content that should not appear in the SERPs. We recommend using an SEO crawler that makes it easy to detect crawling issues.
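As a quick sanity check on the robots.txt side, the sketch below uses Python’s standard `urllib.robotparser` module to list which of your key URLs a given robots.txt would block for Googlebot. The robots.txt content, the URLs, and the helper name `blocked_pages` are hypothetical. Keep in mind that the standard-library parser does not replicate every detail of Google’s own matching rules, so treat the result as a first pass rather than a verdict.

```python
from urllib.robotparser import RobotFileParser

def blocked_pages(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]

# Hypothetical robots.txt that accidentally blocks the CSS folder.
robots_txt = """\
User-agent: *
Disallow: /cart/
Disallow: /assets/css/
"""

# Hypothetical list of pages and resources that must stay crawlable.
important_urls = [
    "https://www.example.com/",
    "https://www.example.com/products/shoes",
    "https://www.example.com/assets/css/main.css",
]

for url in blocked_pages(robots_txt, important_urls):
    print("Blocked for Googlebot:", url)
```

In this made-up example, the stylesheet is flagged because the `/assets/css/` rule applies to every user agent.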

Give a simple structure to your website

Your site should have a home page, categories or tags, and content pages. Include internal links to establish a site hierarchy and make it easy for crawlers to find the pages. Again, we suggest using an SEO tool that allows you to see your site structure at a glance.

Don’t stop updating your XML sitemap

Keeping the XML sitemap up to date is essential, as it helps bots understand how your site is organized and where its internal links lead. This practice also allows Google to discover and index new pages more quickly.
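One way to keep the sitemap current is to regenerate it whenever content changes. The following is a minimal sketch that builds a sitemap in the standard sitemaps.org format from a list of pages. The function name `build_sitemap` and the page list are assumptions for illustration; in practice the list would come from your CMS or database.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs.

    last_modified is expected as an ISO date string such as "2024-03-10".
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical pages; replace with the real list of indexable URLs.
pages = [
    ("https://www.example.com/", "2024-03-10"),
    ("https://www.example.com/blog/new-post", "2024-03-11"),
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The sketch only emits `loc` and `lastmod`. Redirected, blocked, or error URLs should be left out of the page list so that the sitemap points crawlers only at pages worth crawling.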

Avoid redirect chains

It is not advisable for your site to chain 301 and 302 redirects, where one redirect leads to another before reaching the final page. You may hardly notice one or two chained redirects left behind occasionally, but you should not let the number keep growing.
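To spot chains, you can follow each redirect until it reaches a final URL and count the hops. The sketch below works on a plain mapping of source URL to redirect target, for example one exported from a crawl; the mapping, the function names, and the one-hop threshold are all assumptions for illustration, and no network requests are made.

```python
def redirect_chain(url, redirects, max_hops=10):
    """Follow redirects from url and return every URL visited, in order."""
    chain = [url]
    while chain[-1] in redirects and len(chain) <= max_hops:
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:
            break  # redirect loop: stop instead of cycling forever
    return chain

def long_chains(redirects, max_allowed_hops=1):
    """Return the chains that need more hops than max_allowed_hops."""
    result = {}
    for start in redirects:
        chain = redirect_chain(start, redirects)
        if len(chain) - 1 > max_allowed_hops:
            result[start] = chain
    return result

# Hypothetical redirect map: source path -> target path.
redirects = {
    "/old-shoes": "/shoes-2023",
    "/shoes-2023": "/shoes",
    "/contact-us": "/contact",
}

for start, chain in long_chains(redirects).items():
    print(" -> ".join(chain))
```

Here `/old-shoes` takes two hops to reach `/shoes`, so it is reported; pointing it straight at `/shoes` would remove the chain.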

Manage your URL parameters properly

If your content management system generates a large number of dynamic URLs, many of them may lead to the same page. Search engines will treat them as separate URLs, however, and spend your crawl budget unnecessarily. To avoid duplicate content problems, you must manage your URL parameters properly.
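To see how much parameter-driven duplication a site has, it can help to reduce each URL to a canonical form and check how many variants collapse into it. This is a minimal sketch using the standard `urllib.parse` module. The set of parameters that can safely be ignored is an assumption and depends entirely on your site, as does the helper name `canonical_url`.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Parameters assumed NOT to change the page content (site-specific guess).
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sessionid", "sort"}

def canonical_url(url):
    """Drop ignored parameters and sort the rest so variants compare equal."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))

# Hypothetical URL variants generated by a CMS.
variants = [
    "https://www.example.com/shoes?color=red&utm_source=newsletter",
    "https://www.example.com/shoes?sessionid=abc123&color=red",
    "https://www.example.com/shoes?sort=price",
]

for url in variants:
    print(url, "->", canonical_url(url))
```

The first two variants reduce to the same URL, which is the version you would typically declare as canonical or link to internally.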

Eliminate 404 and 410 error pages

These types of pages waste your crawl budget, and they can also damage the user experience. That is why it is important to fix URLs that return 4xx and 5xx status codes.
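A simple way to prioritize fixes is to group the URLs that bots requested by the error status they returned. The sketch below assumes you already have (URL, status code) pairs, for instance from a crawl export or your server logs; the data and the function name `error_urls` are hypothetical.

```python
from collections import defaultdict

def error_urls(hits):
    """Group URLs by status code, keeping only 4xx and 5xx responses."""
    errors = defaultdict(list)
    for url, status in hits:
        if 400 <= status < 600:
            errors[status].append(url)
    return dict(errors)

# Hypothetical (url, status) pairs seen by a crawler.
hits = [
    ("/shoes", 200),
    ("/old-catalog", 404),
    ("/discontinued-item", 410),
    ("/search?q=boots", 500),
    ("/summer-sale-2019", 404),
]

for status, urls in sorted(error_urls(hits).items()):
    print(status, urls)
```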

Improve site loading speed

Site speed is fundamental both to improving the crawl budget and to ranking well. Bots can crawl more pages in the same amount of time when those pages load quickly. Speed is also a critical factor in the user experience and, therefore, in the page’s positioning.

Use feeds to your advantage

Google says that feeds are a way for large and small websites to distribute content beyond the visitors who use browsers. They allow users to subscribe to regular updates delivered automatically via a web portal, a newsreader, or sometimes even plain old email.

Feeds are also very useful for crawling, as they are among the resources that search engine bots visit most often.

Include internal links pointing to the pages with less traffic

There is no doubt that internal linking is a great SEO strategy. It improves navigation, distributes page authority, and increases user engagement. But it’s also a good tactic for improving the crawl budget, as internal links lead the way for the crawler as it navigates the site.

Build external links

Studies have shown a strong correlation between the number of times spiders visit a website and the number of external links pointing to it.

How to increase your crawl budget

Matt Cutts, the former head of Google’s Web Spam team, explained this topic by talking about the relationship between crawl budget and authority. He argued that the number of pages crawled is more or less proportional to their PageRank.

As Cutts explained, if the root page has many incoming links, it gets crawled. This root page can then point to other pages, which will receive PageRank and will also be crawled. But PageRank decreases as you go deeper into the site.

Although Google no longer publicly updates PageRank values, this parameter is still present in the search engine’s algorithms. Let’s talk about page authority instead of PageRank: the conclusion is that it is closely related to the crawl budget.
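The way PageRank thins out with depth can be illustrated with the simplified textbook version of the algorithm. The sketch below is not Google’s production system; the damping factor of 0.85, the iteration count, and the tiny site graph are assumptions chosen for illustration. It also assumes that every page links to at least one other page and that every link target appears as a key in the graph.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank by repeated redistribution of rank over links.

    links maps each page to the list of pages it links to. Every page must
    have at least one outgoing link, and every target must be a key.
    """
    pages = list(links)
    rank = {page: 1 / len(pages) for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small baseline share.
        new_rank = {page: (1 - damping) / len(pages) for page in pages}
        for page, targets in links.items():
            share = damping * rank[page] / len(targets)
            for target in targets:
                new_rank[target] += share
        rank = new_rank
    return rank

# Hypothetical site: home -> categories -> articles, all linking back home.
site = {
    "home": ["category-a", "category-b"],
    "category-a": ["home", "article-1", "article-2"],
    "category-b": ["home", "article-3"],
    "article-1": ["home"],
    "article-2": ["home"],
    "article-3": ["home"],
}

for page, score in sorted(pagerank(site).items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the home page ends up with the highest score, the category pages come next, and the articles one level deeper receive the least, which mirrors the pattern Cutts described.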

Thus, it is clear that you must increase the site’s authority to get a higher crawl budget. This is largely achieved with more external links.